What is A/B Testing?

A/B testing, also known as split testing, is the method of showing two similar but distinct campaigns to an audience to see which performs better. As Optimizely explains it, A/B testing is essentially “an experiment where two or more variants of a page are shown to users at random, and statistical analysis is used to determine which variation performs better for a given conversion goal.”
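To make the “statistical analysis” part of that definition concrete, here is a minimal Python sketch of one common way to compare two conversion rates: a pooled two-proportion z-test. This is an illustration only, not necessarily the method Optimizely or Exit Intelligence uses; the function name and the visitor counts are made up for the example.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conversions_a, visitors_a, conversions_b, visitors_b):
    """Return (z, two-sided p-value) for the difference in conversion rates.

    Hypothetical helper for illustration. Assumes each visitor was randomly
    shown exactly one variant and that both variants saw at least one
    conversion and one non-conversion (otherwise the standard error is zero).
    """
    rate_a = conversions_a / visitors_a
    rate_b = conversions_b / visitors_b
    # Pooled rate: the conversion rate if both variants performed the same.
    pooled = (conversions_a + conversions_b) / (visitors_a + visitors_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Made-up numbers: variant A converts 200 of 2,500 visitors, B converts 260 of 2,500.
z, p = two_proportion_z_test(200, 2500, 260, 2500)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value (0.05 is a common cutoff) suggests the gap between the two variants is unlikely to be random noise. A large one means you don’t have a winner yet.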

Why is A/B Testing Important?

A/B testing is incredibly important because it helps ensure you are optimizing your content and using your resources as efficiently and effectively as possible, which increases your ROI and helps you reach your brand’s goals. Without A/B testing, you are essentially guessing at what your consumers want to see and what prompts them to respond in the way that benefits your brand most. Without data and statistics backing these thoughts, they’re just thoughts.

Okay… I already A/B tested my campaign and found a winner. I’m done now, right?

WRONG!

It’s easy to think that as soon as you hit your desired goal or ROI, you should keep the campaign that got you there and halt all testing. Why change something that works and hits your goal?

Well, eventually the winning campaign will no longer be relevant. Your audience will continue to see the same thing over and over and eventually, performance will drop.

Here at Exit Intelligence, we have seen this happen first-hand to some of our customers. After a conclusive A/B test knocked results out of the park, they decided to halt all testing. Sure enough, once testing stopped, success rates began to decrease in the second month of the same continuous campaign, which is why we always encourage continuous A/B testing.

Why doesn’t a winning campaign stay a winner?

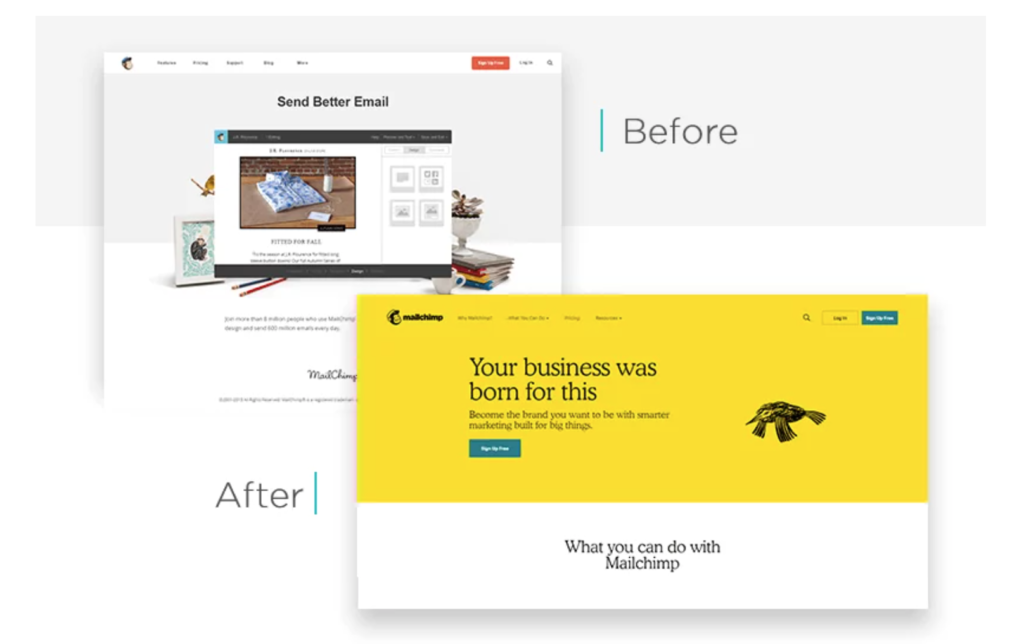

Just as your other marketing efforts, such as website design and Google Ads, don’t remain stagnant, your popups shouldn’t either. They should be continuously optimized and tested as the other elements of your marketing change, to ensure there is congruence throughout your brand.

Some possible reasons why a campaign doesn’t stay a winner:

- Offers that no longer make sense, or better incentives offered on your homepage

- Overlays that show on landing pages already asking for emails

- Brand theme/color changes

- Brand voice alterations

- CTA button changes to the site

Pro Tip: Basically, anything that you’ve designed according to your site’s brand guide will need to be updated on your popups too, so your visitors don’t feel like they’re being handed off to a 3rd party from back in time!

A/B testing is a conversion technique that never ends. It is something that you should be constantly doing and optimizing. You will never have a final “winner” because your other marketing campaigns are constantly changing, so you must match your popups to those.

I don’t want to test because I’m happy with current results and don’t want to risk them getting worse.

This is a completely rational fear for many marketers. You have a popup that is converting well, and starting a new A/B test could funnel your audience to an ad that might not convert as well, resulting in missed opportunities. We get that, but as said by Ellen DeGeneres, “It’s failure that gives you the proper perspective on success.”

Failure is just a roadmap to success.

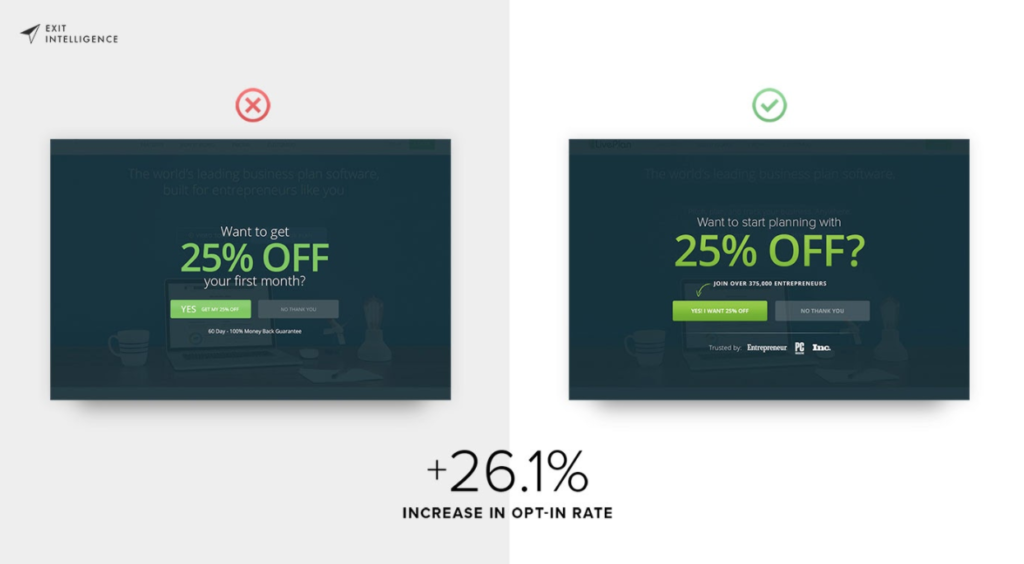

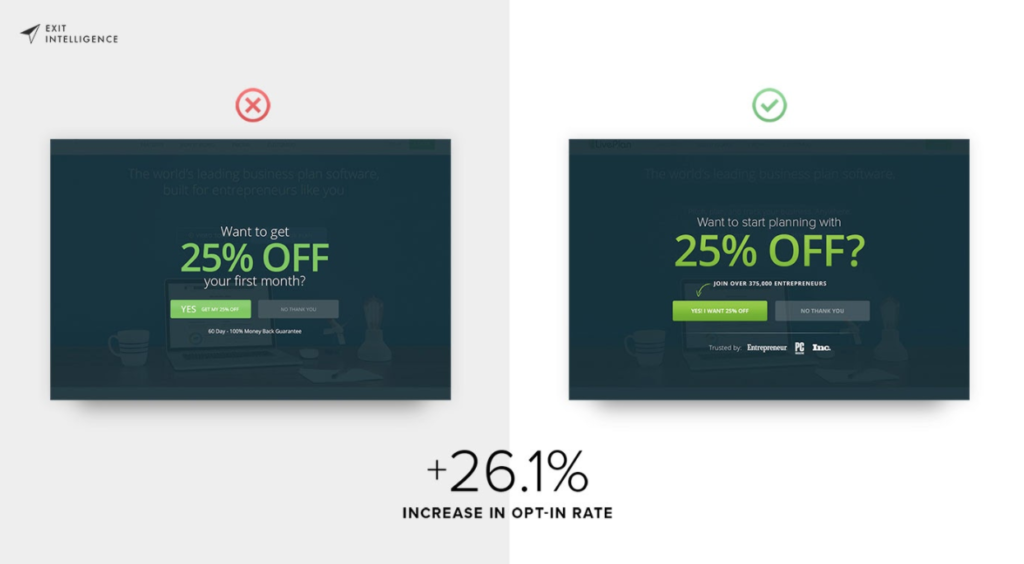

If you launch a campaign that performs terribly, you know what not to do going forward, which is arguably just as important as knowing what to do. Each time you identify a loser in a campaign, you learn from it and know what does and does not work for future campaigns. Additionally, as previously mentioned, the results of your current campaign are going to worsen over time whether or not you keep testing, so standing still carries its own risk.

Not only are the winning and losing aspects of your campaign important to understand for future, similar campaigns, but you can also take these learnings and apply them to all other parts of your marketing. For example, say you ran an A/B test with popups using two different tones of voice, and the playful tone was a clear winner. You should now consider using this type of (or even the exact same) language in your Google ads, social media posts, website headings, etc.

How often should you analyze test results?

Constantly! A/B testing is only beneficial if you have someone who is constantly analyzing the results. If you set up a test and just allow it to run on its own with infrequent check-ins, you may have a campaign that is drastically underperforming and, therefore, losing out on lead capture. Check in on your tests constantly so you are ready to choose a winning campaign.

Lucky me, I found a winning campaign after only a day!!

Hold up… You should never declare an A/B test winner after only a couple of hours, or even a day or two. To ensure your results are accurate and your sample audience is representative of your true audience, you should allow your campaigns to run for at minimum a full week so you capture both weekday and weekend audiences. At Exit Intel, we recommend declaring a winning popup only after at least 5,000 people have been served one of the two ads and the test has run over 2 weeks, to get an accurate sample audience.
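If you track your tests in a script or spreadsheet export, the two thresholds above can be turned into a simple guard. The sketch below is a hypothetical helper, not an Exit Intel tool. It assumes the 5,000 figure counts visitors across both variants combined and reads “over 2 weeks” as at least 14 days.

```python
MIN_VISITORS = 5_000  # total visitors served either variant (assumed reading)
MIN_DAYS = 14         # "over 2 weeks", read here as at least 14 days (assumption)


def ready_to_declare_winner(visitors_served, days_running):
    """Return True only when both the traffic and duration thresholds are met."""
    return visitors_served >= MIN_VISITORS and days_running >= MIN_DAYS


# A test with plenty of traffic but only one day of data is not ready yet.
print(ready_to_declare_winner(visitors_served=12_000, days_running=1))
# Enough traffic and enough time: safe to start comparing results.
print(ready_to_declare_winner(visitors_served=6_200, days_running=15))
```

Requiring both conditions matters: a burst of traffic in a single day can hit the visitor count without ever including a weekend audience.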

Pro Tip: Don’t want to wait to test a variable? No problem! Copy your campaigns and segment where they run to test multiple variables on different areas of your site. Be sure to use data from a tool like GA to know what your visitors are looking for, and don’t divert so much traffic into segments that your main test never reaches a conclusive result!

Let’s think about testing in terms of revenue

When it comes down to it, what are you losing by not continuously A/B testing? Maybe you’ll miss out on a few leads if you test a campaign that doesn’t do well, sure. But by optimizing and fine-tuning your winning campaigns, you will make up for those missed leads tenfold. If you’re not testing, you’re not innovating. You’re not learning about your website visitors or new trends or what types of incentives work best for your brand.

For example, suppose your brand sells kayaks, both hard plastic ones and inflatable ones. You A/B test a popup on your site that is exactly the same, except one variation displays an image of the hard plastic kayaks and the other displays an inflatable kayak, and the latter popup performs significantly better. This gives you a better understanding of what your audience is looking for and tells you that you should focus more effort on marketing your inflatable kayaks than the hard plastic ones.

Similarly, you should be testing various incentives to see which encourage your audience to convert most efficiently. You could run an A/B test that promotes a downloadable ebook vs. free shipping, to understand what your audience prefers. Do they want content? Does price matter? It is important to keep in mind that this preference may change over time, which is another reason to continue testing.

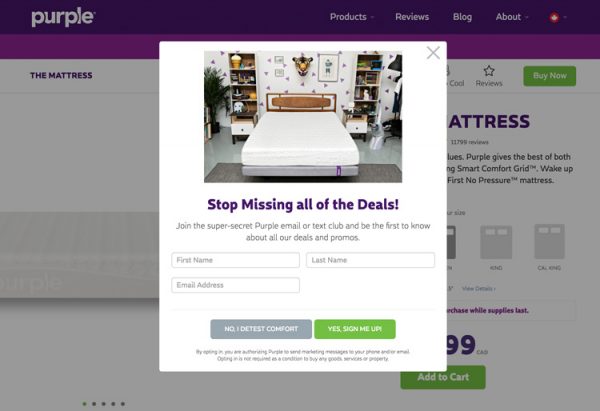

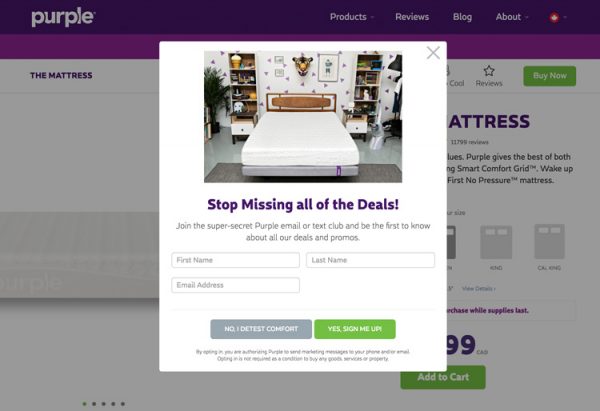

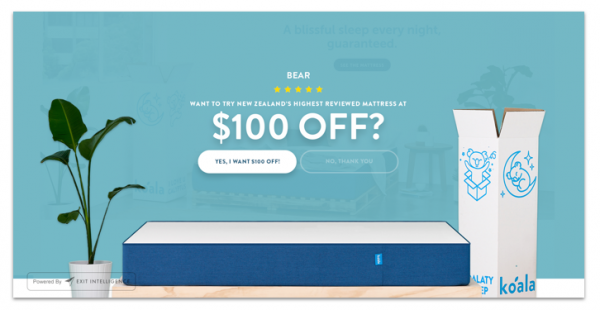

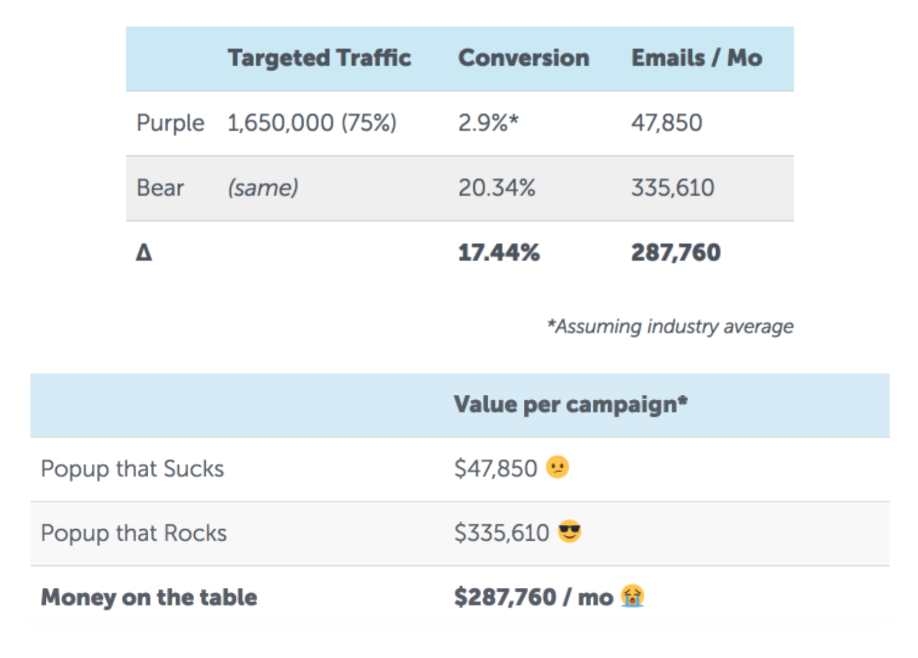

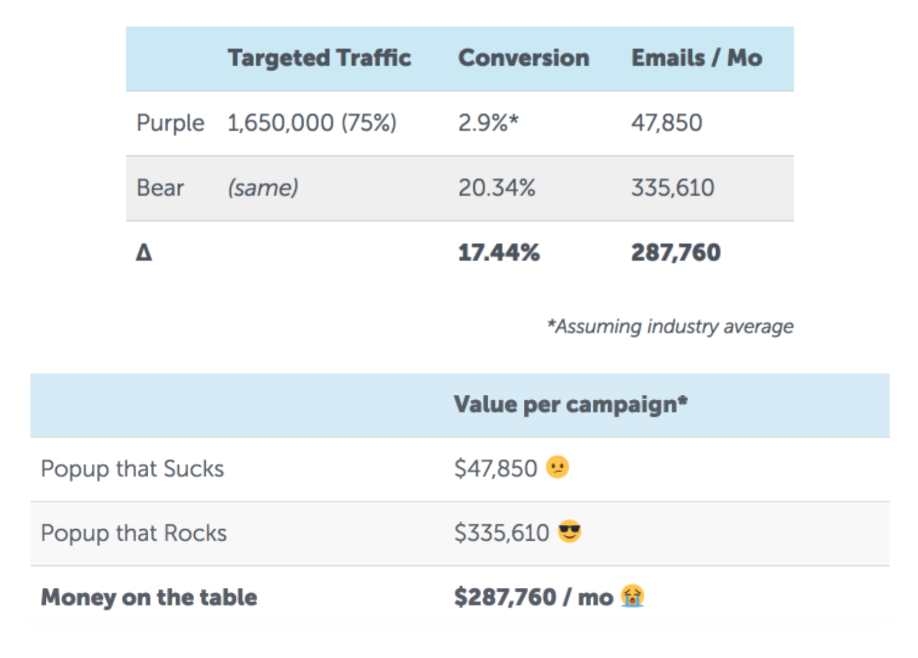

Let’s look at these popups from Purple (right) and Bear (left). Purple is asking people to subscribe to its newsletter. Bear is asking visitors if they want $100 off a mattress, on a popup that has clearly been A/B tested and optimized. Even though Bear is offering a large discount, its campaign is over 7x more valuable.

Testing is all about goal setting and augmenting your brand, making decisions that are backed by data and statistics.

Opt-In Rate Vs. Conversion Rate

When analyzing the results of A/B tests, you need to consider more than one factor before choosing a winner. When you A/B test a popup ad, you’re typically looking at which ad received a higher opt-in rate. However, you should also look at how this audience converted after opting in. Maybe one of the popups received a 25% opt-in rate, but only 2% of the new subscribers actually bought something on your website, whereas the “losing” ad that received a 20% opt-in rate generated a purchase conversion rate of 15%. In that case, the “losing” ad actually produces more purchases per visitor.
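The arithmetic behind that comparison is worth spelling out. This short Python sketch multiplies the opt-in rate by the purchase rate among subscribers to get the share of all visitors who end up buying. The function name is made up for illustration, and the rates are the hypothetical ones from the example above.

```python
def purchases_per_visitor(opt_in_rate, purchase_rate):
    """Share of all popup viewers who opt in AND then go on to purchase."""
    return opt_in_rate * purchase_rate


# Popup with the higher opt-in rate: 25% opt in, 2% of those subscribers buy.
higher_opt_in = purchases_per_visitor(0.25, 0.02)
# "Losing" popup: 20% opt in, but 15% of those subscribers buy.
lower_opt_in = purchases_per_visitor(0.20, 0.15)

for name, rate in [("higher opt-in popup", higher_opt_in),
                   ("lower opt-in popup", lower_opt_in)]:
    # Scale to 1,000 visitors so the difference is easy to read.
    print(f"{name}: about {rate * 1000:.0f} purchases per 1,000 visitors")
```

Per 1,000 visitors, that works out to roughly 5 purchases for the popup with the higher opt-in rate and roughly 30 for the “losing” one, a sixfold difference in the opposite direction.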

Make sure you look at all aspects of your audience’s behavior when implementing A/B testing.

To summarize…

A/B testing is all about optimization and innovation. Without testing your marketing campaigns, you’re only using biases and hypotheses on what does and does not work. You need to back your speculation with data and statistics to truly create a campaign that converts and will reach your brand’s goals.

A/B testing can be stressful, time-consuming, and confusing, but that’s why we’re here to help. At Exit Intelligence, we handle all A/B testing and optimization for you so you can sit back, relax, and watch the best popups bring in thousands of email & SMS subscribers.